* By Rogério Pires

Artificial Intelligence is transforming the medical landscape, offering unprecedented opportunities to improve diagnostics, treatments and operational efficiency. However, this technological revolution also raises significant concerns about patient safety and the reliability of the information these tools provide.

Many physicians have recently begun using AI chatbots such as ChatGPT to help make clinical decisions, even though clear guidelines and adequate regulation are still lacking. According to a survey by KFF Health News, 76% of physicians use these tools to check for drug interactions, support diagnosis, and plan treatment. Easy access and the ability to process information quickly make these chatbots attractive to professionals who face heavy workloads and need to stay up to date.

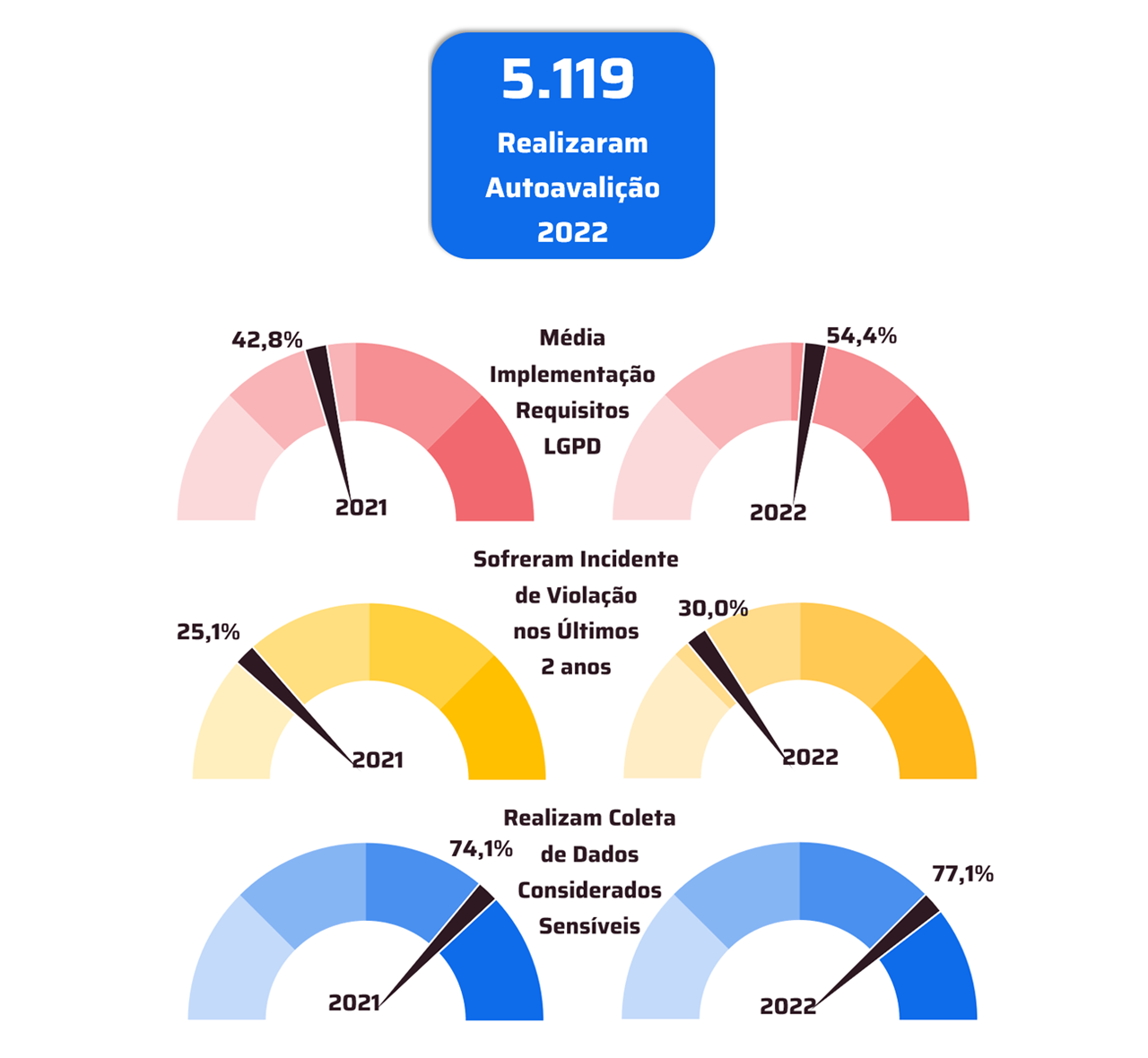

However, experts warn of the risks of relying on unverified systems. There are legitimate concerns about incorrect responses, AI “hallucinations,” and a lack of compliance with health data privacy regulations, such as Brazil’s General Data Protection Law (LGPD). As Graham Walker, MD, an emergency medicine physician at Kaiser Permanente, told Fierce Healthcare, “these models may not know they’re making a mistake, and they can’t tell you.”

At the same time, AI-powered self-diagnosis has become a growing concern among Brazilian physicians. According to a Medscape survey, 83.1% of professionals say that patients put themselves at risk when they use AI for self-diagnosis. Easy access to public chatbots and AI-powered health apps can lead patients to misinterpret symptoms and delay seeking appropriate medical care.

This complex situation calls for careful reflection on the role of AI in healthcare. AI will always provide an answer, whether or not that answer is true. And when it gets something wrong, it will still try to convince us that it is right, which is particularly dangerous. This reinforces the importance of clinical judgment and the need for professionals to understand the limitations of these technologies.

Given this scenario, AI must be integrated into medicine responsibly and ethically. That is why it is important to have reliable technology partners that ensure software quality and the security of patient information. Robust, secure solutions that meet the sector’s needs and comply with current regulations already exist.

By choosing solid technology partners, healthcare institutions can make AI tools an ally, improving efficiency and accuracy in patient care. In this way, medicine can benefit from AI while keeping technology at the service of human care and patient safety.

*Rogério Pires, Director of Healthcare Products at TOTVS

Notice: The opinion presented in this article is the responsibility of its author and not of ABES (Brazilian Association of Software Companies).