*By Cássio Pantaleoni

The year 2023, in a way, was the regulatory year for Artificial Intelligence (AI). Back in May, the G7 Summit highlighted the importance of promoting guardrails for advanced AI systems on a global basis.

In August, it was China's turn to enact a law aimed specifically at Generative AI, with the goals of mitigating harm to individuals, maintaining social stability and securing its long-term international regulatory leadership.

In the wake of this process, it fell to the US, under then-President Biden, to issue an executive order guiding the application of AI with respect to reliability, security and the protection of the fundamental elements of American sovereignty.

However, the icing on the cake was, to a large extent, the European Union's AI Act, provisionally agreed in December 2023 and approved in early 2024. Deeply debated and quite comprehensive, the Act stands as an internationally oriented regulation, conceived as a legal framework for the development and application of AI systems in the bloc's member countries.

In Brazil, Law 2,338 on Artificial Intelligence marks a turning point in the regulation of emerging technologies in the country. Broadly speaking, the law has positive aspects, but it also shows certain weaknesses in areas that are strategic for the development of our leadership in the field of AI.

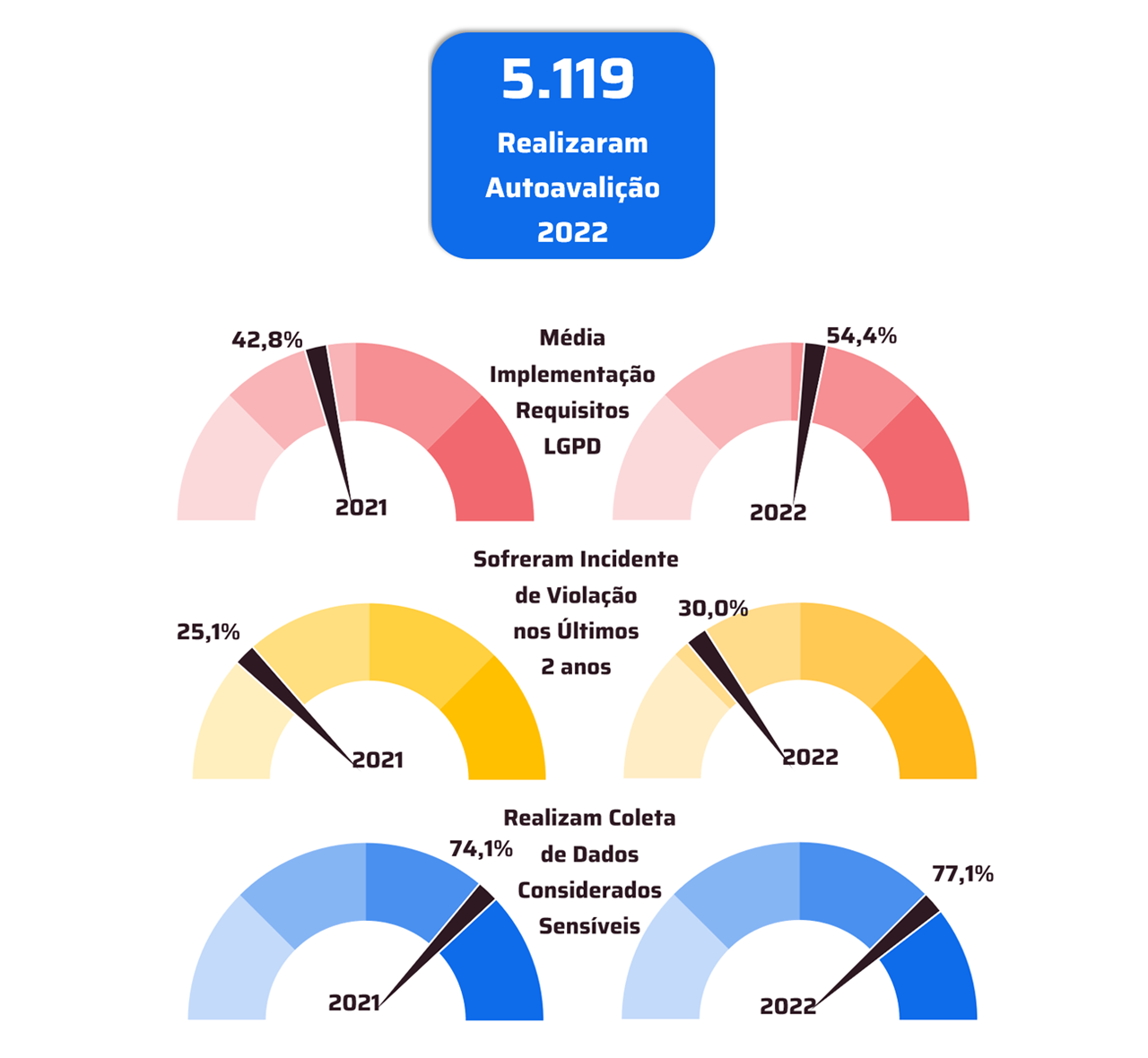

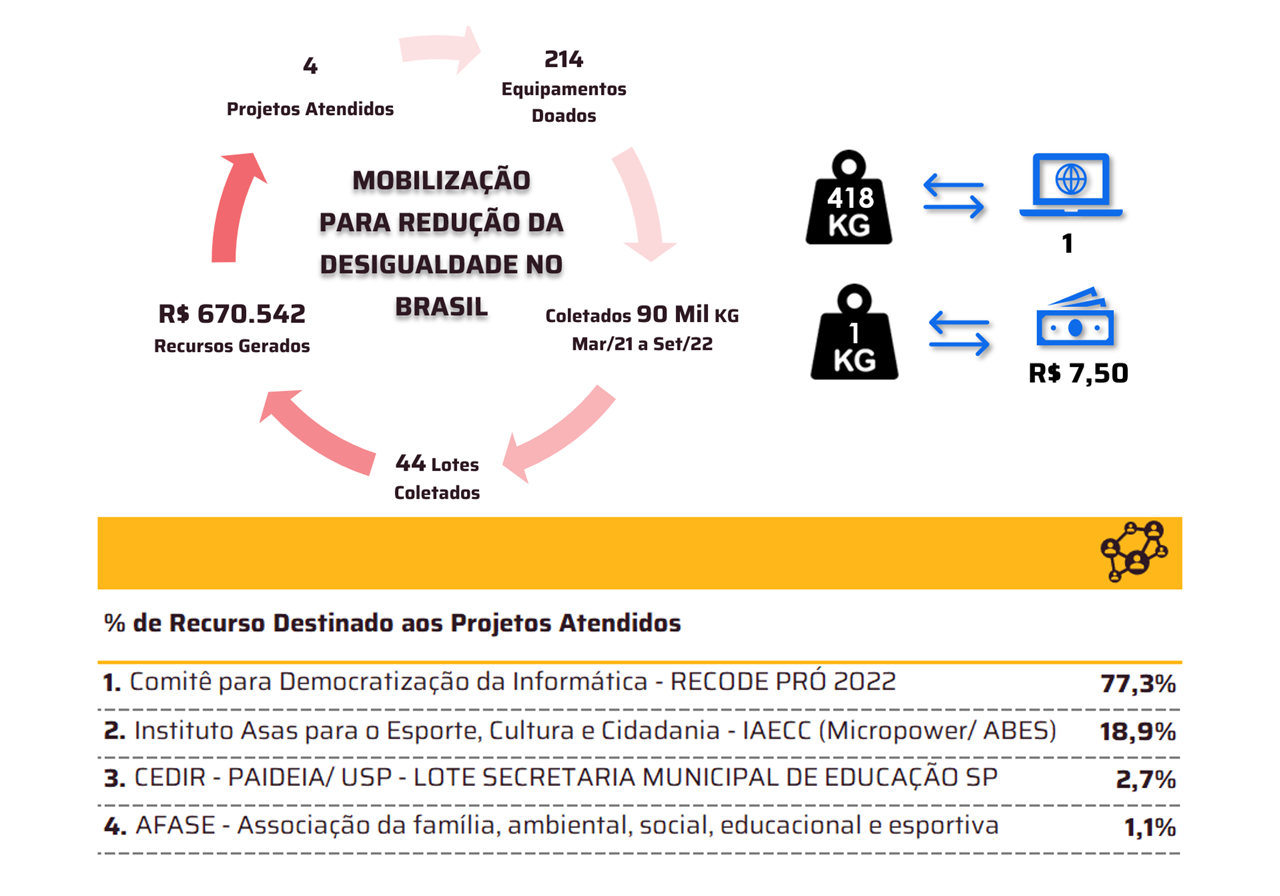

At the heart of the Brazilian regulation are provisions drawn from the General Data Protection Law (LGPD), which emphasize the protection of personal data and privacy. The law aims to ensure that AI does not compromise individual rights. The law also seeks to encourage innovation by offering some tax incentives and subsidies to companies that invest in AI research and development. This aspect aims to position Brazil as a hub for technological innovation, stimulating competitiveness and the creation of startups in the AI sector. As for social impacts, the law addresses digital inclusion and the ethical use of AI to reduce inequalities by promoting educational and training programs for vulnerable populations, preparing the workforce for the era of artificial intelligence. The idea is to mitigate the negative social impacts of automation and promote a more equitable transition.

However, there are negative aspects to highlight. The first is excessive bureaucracy, such as the requirement for multiple assessments and certifications, which could burden companies, especially startups and small businesses, with additional costs and time-consuming processes. This bureaucratic burden could discourage innovation and the adoption of new technologies. Although the law is well intentioned, some critics point to ambiguity in certain provisions, which allows for conflicting interpretations and creates legal uncertainty. The lack of clarity regarding specific responsibilities and penalties could make the law difficult to apply in practice. There are also concerns about the potential use of AI regulation for purposes of state control, which raises questions about the protection of civil liberties and the limits of state intervention.

In any case, we are looking at an important milestone in the regulation of AI. Regulation of this kind is necessary to strike a balance between protecting rights, encouraging innovation and promoting social inclusion. However, the effectiveness of the law will depend on its practical implementation and on the ability to mitigate the associated risks. Transparency, regulatory clarity and constant vigilance from civil society will be essential to ensure that the benefits outweigh the challenges.

*Cássio Pantaleoni, Head of AI Solutions and Strategy at Quality Digital

Notice: The opinion presented in this article is the responsibility of its author and not of ABES (Brazilian Association of Software Companies).